Why you need email A/B testing

Email A/B split testing is the best way to measurably demonstrate which email campaign brings you the most success. It's also the quickest way to figure out what your audience likes (and to optimize your email campaigns accordingly).

To get real results from your email campaigns, you need to use A/B tests.

Not sure where to start? You're not alone.

Many email marketers are unfamiliar with A/B testing. It's time to take the mystery out of A/B testing and show you how easy it is to get the most out of your email marketing.

We're going to teach you all about email A/B testing: what it is, how and when to use it, and what to test. It's easier than you think. Heck, after reading this guide you'll wonder why you haven't done it before.

What is A/B testing in email marketing (and why you shouldn't fear it)

Email A/B testing, also called email split testing, means sending 2 different versions of your email to 2 different sample groups from your email list. The email that gets the most opens or clicks (aka "the winning version") is then sent to the rest of your subscribers.

Most people skip A/B testing in email marketing because they don't know how or what to test. If this is you, read on. It's easier than you might think, and you'll find a huge opportunity to improve your campaigns.

A/B split testing is simply a way of evaluating and comparing two things.

Smart marketers do this because they want to know:

- Which subject line gets the best open rates

- Whether their audience is more drawn to emojis

- Which button text makes people most eager to click

- Which imagery in their emails drives more conversions

- Which preheader text produces the best open rate

- And so on. There's so much to discover!

With email marketing A/B tests you can improve your metrics, increase conversions, get to know your audience, and find out what drives sales.

What's more, the testing itself is a breeze.

In your email marketing tool, you simply set up 2 emails that are identical except for 1 variable, such as a different subject line. You then send the 2 emails to a small sample of your subscribers to see which email is more effective.

Half of your test group receives Email A and the other half receives Email B. The winner is determined by what you're trying to measure. For example, to find out which version gets more people to open your emails, you use the open rate as your success metric. Let's say version B gets higher open rates. It is then sent automatically to the rest of your subscribers, since it performed better in the test. It might even become an email template for future marketing campaigns!
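To make the winner-selection step concrete, here is a minimal Python sketch. It assumes you have exported delivered, open, and click counts for each variant from your email tool; the `VariantResult` class, the `pick_winner` function, and the numbers are hypothetical. The sketch only compares rates and does not check whether the difference is statistically significant. It also shows that the winner can change depending on the metric you choose.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    delivered: int  # emails delivered to this test group
    opens: int      # unique opens
    clicks: int     # unique clicks

    def rate(self, metric: str) -> float:
        """Return the open rate or click rate as a fraction of delivered emails."""
        if self.delivered == 0:
            return 0.0
        if metric == "open_rate":
            return self.opens / self.delivered
        if metric == "click_rate":
            return self.clicks / self.delivered
        raise ValueError(f"unknown metric: {metric}")


def pick_winner(a: VariantResult, b: VariantResult, metric: str) -> VariantResult:
    """Return the variant with the higher value of the chosen success metric.

    Ties go to variant A, an arbitrary choice for this sketch.
    """
    return b if b.rate(metric) > a.rate(metric) else a


# Hypothetical results for two test groups of 1,000 subscribers each
a = VariantResult("A", delivered=1000, opens=210, clicks=30)
b = VariantResult("B", delivered=1000, opens=240, clicks=25)
print(pick_winner(a, b, "open_rate").name)   # B
print(pick_winner(a, b, "click_rate").name)  # A
```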

How to set up your A/B test email campaign for accurate results

Setting up your A/B test email campaign is simple in MailerLite. Choose exactly what you want to test, create 2 (or more) versions, choose the right sample size for each variant, and off you go.

While the setup is straightforward, there are a few details in each step that are essential for accurate results. Let's take a look.

Decide which variable to test

When you test 2 subject lines, the open rate will show which subject appealed most to your subscribers. When you test 2 different product images in your email template, you'll want to look at both the click-through rate and conversions.

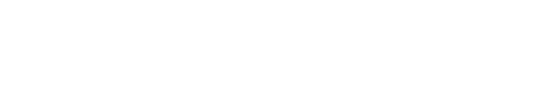

Two emails can show different results depending on which metric you look at. In the example pictured, the plain-text version had a better open rate, but when it came to clicks, the designed template was more effective.

Why? Because the designed version contained the video as a GIF, which encouraged more people to click.

How do you pick the correct sample size?

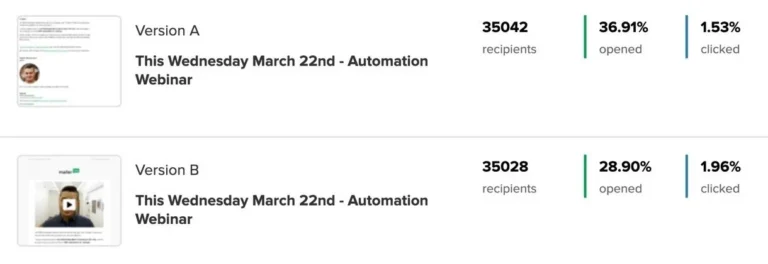

When you have a large email list (over 1,000 subscribers), we recommend sticking to the 80/20 rule (also known as the Pareto principle).

In other words, focus on the 20% that will bring you 80% of the results. For A/B tests, this means sending variant A to 10% of your subscribers and variant B to another 10%. Depending on which variant performs best, the winner is then sent to the remaining 80% of your subscribers.

We recommend this rule for bigger lists because you want statistically significant and accurate results. The 10% sample for each variant needs to contain enough subscribers to show which version had more impact.

When you're working with a smaller list of subscribers, the percentage of subscribers you need to include in the A/B test gets larger if you want statistically significant results. If you have fewer than 1,000 subscribers, you probably need to test 80-95% of your list and send the winning version to only the small remaining percentage.
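Here is a minimal sketch of the split described above, assuming your subscriber list is available as a Python sequence. Shuffling before splitting keeps the groups random, so neither variant ends up with, say, all of your newest subscribers. The `split_for_ab_test` and `suggested_test_fraction` helpers, and the 0.9 value picked from the 80-95% range, are hypothetical illustrations of the rules of thumb in this section, not a MailerLite feature.

```python
import random


def split_for_ab_test(subscribers, test_fraction=0.2, seed=None):
    """Randomly split a subscriber list into group A, group B and the remainder.

    test_fraction is the share of the list used for the test; it is divided
    equally between A and B. The remainder later receives the winning version.
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be in (0, 1]")
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)  # random assignment to groups
    per_variant = int(len(shuffled) * test_fraction / 2)
    group_a = shuffled[:per_variant]
    group_b = shuffled[per_variant:2 * per_variant]
    remainder = shuffled[2 * per_variant:]
    return group_a, group_b, remainder


def suggested_test_fraction(list_size):
    """Hypothetical rule of thumb: test 20% of lists over 1,000 subscribers,
    otherwise most of the list (0.9 here, inside the 80-95% range)."""
    return 0.2 if list_size > 1000 else 0.9


# Usage: a hypothetical list of 5,000 subscriber IDs
ids = range(5000)
group_a, group_b, rest = split_for_ab_test(ids, suggested_test_fraction(len(ids)), seed=1)
print(len(group_a), len(group_b), len(rest))  # 500 500 4000
```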

After all, if 12 people click a button in email A and 16 people do so in email B, you can't really tell which button performs better. Make your sample size large enough to get statistically significant results.
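To see why 12 versus 16 clicks isn't conclusive, you can run a standard two-proportion z-test. The sketch below uses only Python's standard library. The group size of 500 subscribers per variant is an assumption, since the example doesn't state it, and the 0.05 cut-off is a common convention rather than a fixed rule.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value for the difference between two rates
    (pooled two-proportion z-test)."""
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, e.g. zero clicks in both groups
    z = (successes_b / n_b - successes_a / n_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical: 12 of 500 subscribers clicked in A, 16 of 500 in B
p = two_proportion_p_value(12, 500, 16, 500)
print(f"p = {p:.2f}")  # roughly 0.44, far above 0.05: no clear winner
```

A p-value this high means a difference of 4 clicks could easily be down to chance; with larger groups, the same relative difference would become easier to detect.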

Sample size calculator

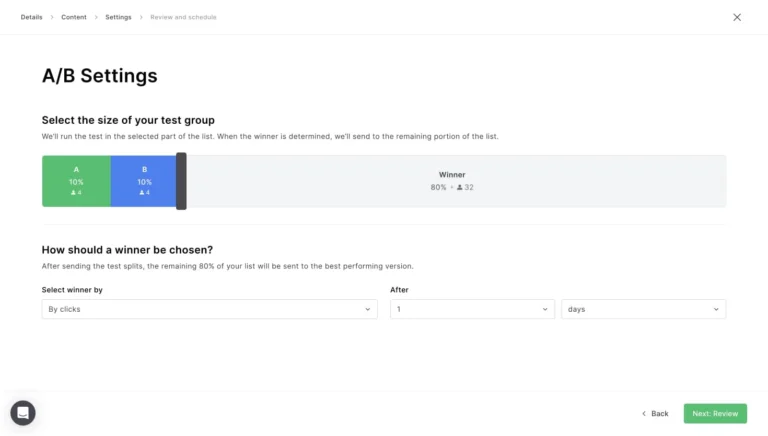

As shown above, this calculator answers the question "How many subjects are needed for an A/B test?"

In this guide, we won't dig into all the technical details, but we'll show you the main things you need to know to use the calculator for your own A/B test.

Sample size: This is the result we're looking for when using this calculator

Baseline conversion rate (BCR): This is your current conversion rate

Minimum Detectable Effect (MDE): This is the smallest effect your test will be able to detect

The MDE depends entirely on whether you want to be able to detect small or large changes from your current conversion rate. You'll need less data (a smaller sample size) to detect large changes, and more data if you want to detect small changes.

To detect small changes, you need to set the MDE lower (for example, at 1%).

If you only care about larger changes, you can set the MDE higher. But be careful not to set it too high: with a high MDE, smaller real differences go undetected, so you won't be able to tell whether your "A to B" change had an effect or not.

The calculator shows you how large your sample should be. In the example above, each variant should include 1,030 email subscribers.
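For the curious, a common textbook approximation for the sample size per variant when comparing two conversion rates is sketched below in Python. It assumes the MDE is an absolute change (0.05 means going from a 20% to a 25% conversion rate), a two-sided test at 5% significance, and 80% statistical power. Online calculators may use slightly different formulas and defaults, so don't expect it to reproduce the calculator's numbers exactly.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(bcr, mde, alpha=0.05, power=0.8):
    """Approximate number of subscribers needed in EACH variant to detect an
    absolute change of `mde` from the baseline conversion rate `bcr`."""
    p1 = bcr
    p2 = bcr + mde
    if mde == 0:
        raise ValueError("mde must be non-zero")
    if not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("bcr and bcr + mde must both lie strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # statistical power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical: 20% baseline conversion rate, detect a 5-point change
n = sample_size_per_variant(0.20, 0.05)
print(n)  # roughly 1,100 subscribers per variant
```

Lowering `mde` to 0.01 in this sketch raises the required sample size many times over, which is the trade-off described above: detecting smaller changes takes much more data.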

When using the calculator, you have to keep in mind that it’s a tradeoff between 2 things:

- Sample size: How much data you need to collect

- Statistical difference: How confidently you want to be able to tell whether group A or group B had better results

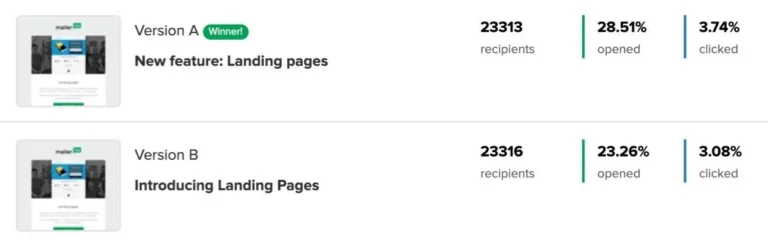

In the example pictured, we ran an A/B test where each group contained around 23,300 subscribers. You'll see that version A had a better open rate. From this test, we learned that our subscribers respond better to the words "new feature" than to "introducing."

Timing window

When do you usually open an email? Your answer is most likely: it depends.

You might be online, see the email come in, and click within 5 minutes. Or you might first see the newsletter 2 hours after it landed in your inbox. Or maybe the subject line didn't grab you enough and you left the email unopened.

These are all real scenarios, which is why you should allow enough time when running an A/B test.

When you're testing variables like subject lines and measuring opens, you can send the winner as soon as 2 hours after sending, but you should wait longer if you're measuring click-throughs. When you're testing your newsletter on active subscribers, you can shorten the waiting time.

Research has shown that when you wait 2 hours, the accuracy of the test will be around 80%. The more time you add beyond those 2 hours, the more accurate your results will be. To reach an accuracy of close to 100%, it's best to wait a whole day.

Be aware that a longer waiting time isn't always better. Some newsletters are time-sensitive and should be sent quickly. In other cases, waiting too long will result in the winning email being sent on the weekend. A weekday versus a Saturday or Sunday can make a big difference to your email stats (check out this article if you're wondering when is the best time to send your email).

The main rule when it comes to optimizing your send time: every business is different, so it's essential to monitor your metrics and keep testing.

Delivery time

Remember that the winning email is sent automatically once the testing period is over. Since this group likely contains the most subscribers, it's smart to schedule the automation so the winning email reaches them at the time you want.

Let's say you're testing 2 subject lines on 20% of your subscribers (10% in each group). You want the winning newsletter to arrive in people's inboxes at 10 AM, and you want to test the open rate for 2 hours.

This means you need to start your test at 8 AM, so your A/B test can run for 2 hours before the winning variant is delivered at 10 AM.

Test only one variable at a time

Imagine you're sending two emails at the same time. The content and the sender's name are identical. The only thing that differs is the subject line. After a few hours, you see that version A has a much better open rate.

When you only test 1 thing at a time and see a clear difference in the metric you're analyzing, you can draw an accurate conclusion. However, if you had also changed the sender's name, it would be impossible to conclude that the subject line made the difference.
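If you keep your email variants as structured data, a quick check like the one below can catch accidental extra changes before you send. This is a hypothetical Python sketch; the field names (`subject`, `sender_name`, `body`) and the example values are illustrative and not tied to any particular email tool.

```python
def changed_fields(variant_a, variant_b):
    """Return the names of fields whose values differ between two email variants."""
    all_fields = variant_a.keys() | variant_b.keys()
    return {field for field in all_fields if variant_a.get(field) != variant_b.get(field)}


def is_single_variable_test(variant_a, variant_b):
    """True only if exactly one field differs, so results can be attributed to it."""
    return len(changed_fields(variant_a, variant_b)) == 1


email_a = {"subject": "Our spring sale is here", "sender_name": "Anna", "body": "Same body text"}
email_b = {"subject": "Spring sale starts today", "sender_name": "Anna", "body": "Same body text"}
print(is_single_variable_test(email_a, email_b))  # True

# Changing the sender name as well makes the result impossible to attribute
email_c = {**email_b, "sender_name": "The Team"}
print(is_single_variable_test(email_a, email_c))  # False
```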

Example of an A/B split test

Are you wondering whether your open rates will improve more by changing the subject line or the preheader?

To find your winning combination, you should run two separate tests:

- First, test two different subject lines with the same preheader

- Then run a second test using the winning subject line with two different preheader texts

- Once you've tested both variables on their own, you can combine the winning subject line and preheader for the best results

Conclusion

As you've learned, there's nothing mysterious or complicated about A/B testing. In fact, email marketing is much harder without A/B testing. Your campaigns won't improve if you don't know what works and what doesn't.

It's really easy to set up an A/B test. Start small and try testing your subject line to improve your open rates. Once you get the hang of it, you can move on and try one of the other A/B tests above.

The only challenging thing about A/B testing is that you're never really finished. There's no limit to what can be tested and what data can be collected from each test. If something works well in a couple of A/B split tests, keep doing it, and move on to testing another part of your email. Also remember: what works today won't necessarily work tomorrow.